Well, let’s see…

Aljazeera

Man, this is taking its sweet time loading: went, made coffee, came back… What is this, am I back on dial-up?

Crappy fixed-width layout that isn’t even 1024-friendly (funny, since they’re only over by what, 16 to 24px?!?), absurdly undersized fixed-metric fonts on several elements… WHAT headings? (Oh, this doesn’t bode well…) Wow, they don’t even use headings… or paragraphs…

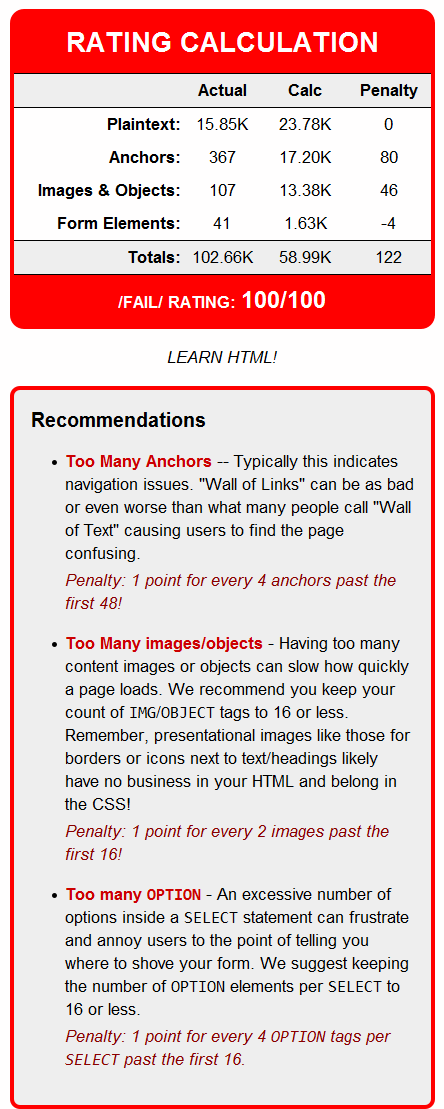

Though really, all we need to say about it can be summed up right here:

<table border="0" width="100%" cellspacing="0" cellpadding="0">

<tr>

<td class="mainMenuBG" >

<div class="dvMainMenu-left">

<span id="SiteMenu1_lblMenuLevel1"><table border="0" cellspacing="0" cellpadding="0"><tr class='mainMenuBG'>

<td id='td200779101832373555' class='mainMenuActive'><div id='divMenu200779101832373555'><a id='lnkMenu200779101832373555' class='mainMenuActive' href="/" target="_parent">News</a></div></td>

WOW.

So… tables for nothing, invalid document structure, invalid nesting of block-level elements inside inlines, endless IDs for nothing, endless classes for nothing, and a total lack of anything remotely resembling semantic markup. Welcome to 1995. Also love the illegible orange on white; such wonderful usability, that.
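If you want to catch that kind of nesting in your own markup, here is a minimal sketch using Python’s standard `html.parser`. The element lists are a hypothetical, deliberately short subset (not a full HTML content model), and `a` is left out on purpose because HTML5 allows it to wrap block content. It only flags a block-level start tag whose immediate parent is an inline element, like the `<span><table>` in the excerpt above.

```python
from html.parser import HTMLParser

# Hypothetical, deliberately small element lists; not a full content model.
INLINE = {"span", "em", "strong", "b", "i", "small", "label", "abbr", "cite", "code", "q"}
BLOCK = {"div", "p", "table", "ul", "ol", "dl", "form", "blockquote", "pre",
         "h1", "h2", "h3", "h4", "h5", "h6"}
VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
        "link", "meta", "source", "track", "wbr"}


class NestingChecker(HTMLParser):
    """Flags block-level start tags whose immediate parent is an inline element."""

    def __init__(self):
        super().__init__()
        self.stack = []      # currently open (non-void) elements
        self.problems = []   # (inline_parent, block_child) pairs

    def handle_starttag(self, tag, attrs):
        if tag in BLOCK and self.stack and self.stack[-1] in INLINE:
            self.problems.append((self.stack[-1], tag))
        if tag not in VOID:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; stray end tags are ignored.
        if tag in self.stack:
            while self.stack:
                if self.stack.pop() == tag:
                    break


def find_block_in_inline(html: str):
    checker = NestingChecker()
    checker.feed(html)
    checker.close()
    return checker.problems


snippet = ('<td class="mainMenuBG"><div class="dvMainMenu-left">'
           '<span id="SiteMenu1_lblMenuLevel1"><table border="0"><tr class="mainMenuBG">'
           '<td><div><a href="/">News</a></div></td>')
print(find_block_in_inline(snippet))  # [('span', 'table')]
```

A real validator checks far more than this; the point is only that the `<span><table>` pattern is mechanically detectable.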

So… how does the markup hold up?

Plaintext: 6.35k * 1.5 = 9.53k

Images/Objects: 60 * 200 = 12k

(***** slap, oh snap, no wonder it takes forever)

Form Elements: 4 * 100 = 0.4k

Ideal Total: 24k

Their Total: 157k

Deathshadow Rating: 3/100 (*15/100)

- -12 points for high image count

Normally the code-size score would be 15/100, but I take one point off for every two objects/images past the first 48. It’s such a train wreck of useless, tiny, impossible-to-see thumbnails that you can’t find any content, so the score is adjusted accordingly.
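The arithmetic behind these ratings, as far as it can be read off the figures in this post, looks roughly like the Python sketch below. The weights (plaintext × 1.5, 200 bytes per image/object, 100 bytes per form element) come straight from the breakdowns; the rating appears to be the ideal total as a percentage of the actual total, with penalties or bonuses applied by hand afterward. The published ideal totals run about 2k above the bare sum of the components for most of the sites reviewed, so the ideal total is passed in rather than recomputed. Rounding to the nearest point and both function names are assumptions for illustration.

```python
def component_estimate_kb(plaintext_kb: float, images: int, form_elements: int) -> float:
    """Sum of the per-item allowances from the breakdowns, in k (1k = 1000 bytes)."""
    plaintext_allowance = plaintext_kb * 1.5       # plaintext weighted by 1.5
    image_allowance = images * 200 / 1000          # 200 bytes per content image/object
    form_allowance = form_elements * 100 / 1000    # 100 bytes per form element
    return plaintext_allowance + image_allowance + form_allowance


def deathshadow_rating(ideal_kb: float, actual_kb: float, adjustment: int = 0) -> int:
    """Ideal size as a percentage of actual size, plus a manual bonus or penalty."""
    if actual_kb <= 0:
        raise ValueError("actual size must be positive")
    # Assumption: round to the nearest whole point before adjusting.
    return round(ideal_kb / actual_kb * 100) + adjustment


print(round(component_estimate_kb(6.35, 60, 4), 1))  # 21.9
print(deathshadow_rating(24, 157))                   # 15
print(deathshadow_rating(24, 157, -12))              # 3
print(deathshadow_rating(11, 13, 5))                 # 90
```

The last two calls mirror the Aljazeera score after its image-count penalty and the CBT Revision 4 template score with its fluid-layout bonus.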

To be fair, let’s look at some non-mainstream news sources… say, one I actually frequent.

OSNews

Crappy little stripe with crappy fixed-metric fonts; when visiting the site I use a user.css to override them, as I’ve been following that site for over a decade.

Plaintext: 18.7k * 1.5 == 28.05k

Images/Objects: 29 * 200 = 5.8k

Form Elements: 4 * 100 = 0.4k

Ideal Total: 36k

Their Total: 63.8k

Deathshadow Rating: 56/100

How about the legend itself, geeky news at its best?

SLASHDOT

Slashdot is kind of sad: six years ago, they were the poster child for accessible websites. Now? They’ve gone to idiotic px-metric fonts, taking anything that was good about the site (fully fluid, simple layout) and flushing it. I have to zoom in 50% just to make the page usable (and that’s gonna cost them on my rating).

So… how does the code hold up?

Plaintext: 15.5k * 1.5 == 23.25k

Images/Objects: 23 * 200 == 4.6k

Form Elements: 11 * 100 == 1.1k

Ideal Total: 31k

Their Total: 107k

Deathshadow Rating: 28/100

Not so good.

I know, how about

SitePoint – the actual home page.

Crappy fixed width (shocking for a web dev site in the age of media queries), absurdly undersized fixed-metric fonts abound, and in a few places the light blue used for links has legibility issues.

Plaintext: 6.63k * 1.5 == 9.95k

Images/Objects: 30 * 200 == 6k

Form Elements: 2 * 100 == 0.2k

Ideal Total: 18k

Their Total: 56.8k

Deathshadow Rating: 32/100

Now, to be fair, how about a site I wrote seven years ago, before I knew better about ANY of this stuff? Could be good for a laugh.

ClassicBattletech.com

Fixed-metric fonts, because of some scripting that I should never have let them shove down my throat; that scripting is responsible for the page being very broken, which is why the next-gen version is waiting in the wings. I don’t rank the current layout very high because it’s a combination of web-rot and trying to make the website match one of their print products, at a time when I wasn’t actually qualified to do the job right. That’s why it’s a fun example: we all start somewhere.

plaintext: 30.1k * 1.5 == 45.15k

images/objects: 13 * 200 == 2.6k

Form elements: 0

Ideal Total: 50k

My total: 61.5k

Deathshadow Rating: 81/100

… and that’s before I stopped using tables for layout, before I stopped throwing scripting at things that were just going to break, and before I figured out how to use heading tags properly. Back then I was still vomiting up Transitional doctypes, still using JavaScript INLINED via onmouseover/onmouseout to handle rollovers, still slapping endless classes on everything for no good reason, and on the whole still practicing old-fashioned markup.

WHAT THE DEVIL are people coding out there?!? But then, there’s a reason I often say when reviewing people’s sites, “You’ve got 100 to 200k of HTML doing the job of 20k or less!”

For the curious, this is the WIP template for the home page of the new version of the site.

CBT Revision 4 Template

So let’s review that… dynamic fonts, dynamic widths, and scripting to assist with width targets as well as media queries. Zero color-contrast issues, unless you count the rollover logos (which are just fine once hovered).

plaintext: 4.43k * 1.5 == 6.65k

(thanks to no content cloaking for scripting asshattery)

images/objects: 22 * 200 == 4.4k

form elements: 2 * 100 == 0.2k

ideal Total: 11k

my total: 13k

Deathshadow rating: 90/100*

(* five-point bonus for fluid layout with dynamic fonts on content areas)

Yes, I’m such an ass I won’t even give my own work 100 out of 100… that’s how you make your work BETTER.

– edit – Oh, all these numbers are AFTER running an ad blocker… with adverts it’s FAR, FAR worse for many of the sites reviewed. Images/Objects also only counts CONTENT images, aka ones that use the IMG tag, not ones applied via CSS, since those are presentation (unless they are image replacements that require several extra hooks for animations, i.e. hover items). Any other images have NO MALFING BUSINESS in the markup.
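For anyone wanting to gather rough counts like these themselves, here is a minimal sketch with Python’s standard `html.parser`. It counts `img` tags, plus `object` and `embed` (an assumption about what “objects” covers), treats `input`, `select`, `textarea` and `button` as form elements (also an assumption), and measures plaintext as whitespace-collapsed text outside `script` and `style`. Images applied via CSS never appear in the markup, so they are never counted. Sizes use 1k = 1000 bytes, matching 60 * 200 = 12k above. `PageCounter` and `measure` are hypothetical names.

```python
from html.parser import HTMLParser

# Assumed tag lists; the post only names the IMG tag explicitly.
CONTENT_IMAGE_TAGS = {"img", "object", "embed"}
FORM_TAGS = {"input", "select", "textarea", "button"}
SKIP_TEXT_IN = {"script", "style"}


class PageCounter(HTMLParser):
    def __init__(self):
        super().__init__()
        self.images = 0
        self.form_elements = 0
        self.text_parts = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in CONTENT_IMAGE_TAGS:
            self.images += 1
        elif tag in FORM_TAGS:
            self.form_elements += 1
        elif tag in SKIP_TEXT_IN:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TEXT_IN and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.text_parts.append(data)


def measure(html: str) -> dict:
    counter = PageCounter()
    counter.feed(html)
    counter.close()
    # Collapse runs of whitespace, roughly as a browser renders them.
    plaintext = " ".join(" ".join(counter.text_parts).split())
    return {
        "plaintext_kb": len(plaintext.encode("utf-8")) / 1000,
        "images": counter.images,
        "form_elements": counter.form_elements,
        "actual_kb": len(html.encode("utf-8")) / 1000,
    }


page = ('<html><head><style>body{color:red}</style><script>var x=1;</script></head>'
        '<body><h1>Hi</h1><p>Hello   world</p><img src="a.png">'
        '<form><input type="text"><button>Go</button></form></body></html>')
print(measure(page)["images"], measure(page)["form_elements"])  # 1 2
```

To get numbers comparable to the ones in this post, feed it the page source as served after ad-blocking.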